Unmap data from an image#

Sometimes we’d like to rip data (i.e. the underlying digits) from a pseudocolour (aka false colour) image. For example, maybe you found a nice map or seismic section in a paper, and want to try loading the data into some other software to play with.

The unmap.unmap() function attempts to do this. Keep your hopes in check though: depending on the circumstances, it might be impossible. The following things will cause problems:

It’s not a greyscale or perceptually linear colourmap. Getting data from greyscale images is easy; it’s colours that cause problems. And I think soon I’ll have a workflow that works especially well on perceptual colourmaps.

You don’t know the colourmap and the colourbar is not included in or otherwise with the image. I don’t (yet) know of a way to reliably figure out the colourmap if you don’t have it or know it.

You don’t have a lot of pixels. Small images are hard to rip data from. We’d always like more pixels.

There are a lot of annotations. Usually these get in the way of the data.

The image is lossily compressed. Most PDFs contain JPEGs and JPEG is lossy. This means the colours are a bit garbled, especially around abrupt edges (like annotations!).

The image has dithering. If the number of colours in the image has been reduced at some point, there’s a good chance it has been dithered.

The image has hillshading or specularity. Cute 3D effects add another layer of complexity… but we can still have a go.

It’s a 3D perspective plot. You might be able to recover the data from what you can see, but 3D perspective views add another type of distortion that I daresay could be undone, but not by me.

The image is poor. If it’s a photo or scan of a paper document… well, now you’ve got the unknown transform from the data to the image, then the unknown transform of the image to the physical plot, then the unknown transform of the plot to the image you have. I mean, come on.

We might be able to cope with two or three of these issues, but if they gang up on you, you might be out of luck. I’d love to hear how you get on!

As an aside, notice how the list above doubles as a set of requirements for making terrible scientific images!
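To make the idea concrete, here is a minimal sketch of one way to invert a known colourmap: look up, for every pixel, the nearest colour in the colourmap's table. This is an illustration under my own assumptions, not necessarily how unmap does it internally; the function name `nearest_colour_unmap` and its `levels` parameter are made up for this example, and it needs SciPy.

```python
import numpy as np
import matplotlib.pyplot as plt
from scipy.spatial import cKDTree


def nearest_colour_unmap(rgb, cmap_name, levels=256):
    """Hypothetical helper: invert a colourmap by nearest-colour lookup.

    rgb is an (H, W, 3) float array in [0, 1]; returns (H, W) values in [0, 1].
    """
    cmap = plt.get_cmap(cmap_name)
    # Sample the colourmap at evenly spaced positions to build a lookup table.
    positions = np.linspace(0, 1, levels)
    lut = cmap(positions)[:, :3]  # drop the alpha channel
    # For every pixel, find the index of the closest colour in the table.
    tree = cKDTree(lut)
    _, idx = tree.query(rgb.reshape(-1, 3))
    return positions[idx].reshape(rgb.shape[:2])


# Round trip on synthetic data: colour it with viridis, then recover it.
truth = np.linspace(0, 1, 50 * 40).reshape(50, 40)
image = plt.get_cmap('viridis')(truth)[..., :3]
recovered = nearest_colour_unmap(image, 'viridis')
print(np.abs(recovered - truth).max())  # small: limited by the 256 colour levels
```

On a clean synthetic image the round trip is only limited by the number of colour levels. Every problem in the list above makes the pixel colours drift away from the table, which is where the lookup starts returning wrong values.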

Get images from the web#

Let’s write a little function to load images from the Internet and transform them into NumPy arrays.

import numpy as np

from PIL import Image

import matplotlib.pyplot as plt

from urllib.request import urlretrieve

import ssl

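# Workaround for certificate errors: this disables HTTPS certificate

# verification for the whole session, so only use it with URLs you trust.

ssl._create_default_https_context = ssl._create_unverified_context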

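def get_image_from_web(uri):

    """Download an image and return it as an (H, W, 3) float array in [0, 1]."""

    # urlretrieve saves the file to a temporary location and returns its path.

    f, _ = urlretrieve(uri)

    img = Image.open(f).convert('RGB')

    # Scale the 8-bit channels to floats between 0 and 1.

    rgb_im = np.asarray(img)[..., :3] / 255.

    return rgb_im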
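If you would rather not switch off certificate checking, here is a sketch of an alternative that reads the download into memory and leaves the SSL settings alone. The names `image_bytes_to_rgb` and `fetch_image` are hypothetical, and the timeout value is an arbitrary choice; the demo at the bottom uses a tiny in-memory PNG so it runs without a network connection.

```python
from io import BytesIO
from urllib.request import urlopen

import numpy as np
from PIL import Image


def image_bytes_to_rgb(raw):
    """Hypothetical helper: decode image bytes to an (H, W, 3) float array in [0, 1]."""
    with Image.open(BytesIO(raw)) as img:
        # convert('RGB') drops any alpha channel and expands palette images.
        return np.asarray(img.convert('RGB')) / 255.0


def fetch_image(uri, timeout=10):
    """Hypothetical helper: download an image with normal certificate checks."""
    with urlopen(uri, timeout=timeout) as response:
        return image_bytes_to_rgb(response.read())


# Offline check with a tiny in-memory PNG instead of a real download.
buffer = BytesIO()
Image.new('RGBA', (4, 3), (255, 0, 0, 128)).save(buffer, format='PNG')
rgb = image_bytes_to_rgb(buffer.getvalue())
print(rgb.shape, rgb.dtype)
```

Splitting the decoding from the download also makes it easy to reuse the same conversion for files you already have on disk.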

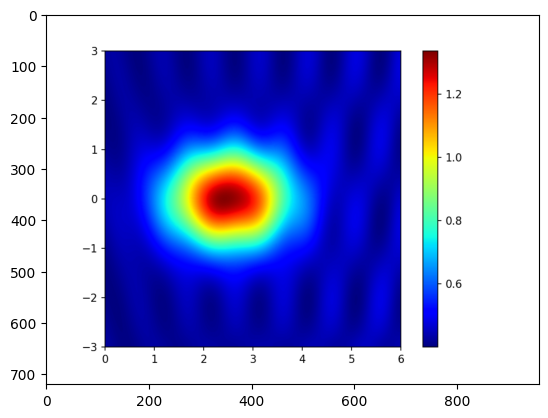

# An image from Hugh Pumphrey's blog post Colours for Contours, licensed CC BY

# https://blogs.ed.ac.uk/hughpumphrey/2017/06/29/colours-for-contours/

uri = 'https://blogs.ed.ac.uk/hughpumphrey/wp-content/uploads/sites/958/2017/06/jeti.png'

img = get_image_from_web(uri)

plt.imshow(img)

<matplotlib.image.AxesImage at 0x7fcb0029d3f0>

An easy image#

Let’s start with that image, because it looks approachable:

It’s large.

It’s a PNG, so losslessly compressed.

No hillshading.

It contains the colourmap, and we know from the blog that it is jet.

And when Hugh used it on his blog, he provided lots of other plots that we can compare to.

from unmap import unmap

data = unmap(img, cmap='jet')

plt.figure(figsize=(10, 6))

plt.imshow(data)

plt.colorbar()

plt.savefig('../_static/Hugh_Pumphrey_CC-BY_unmapped_1.png', dpi=200)

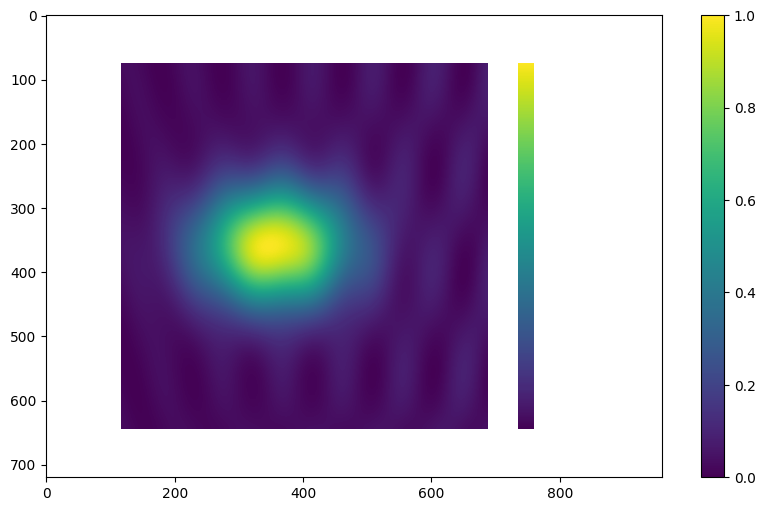

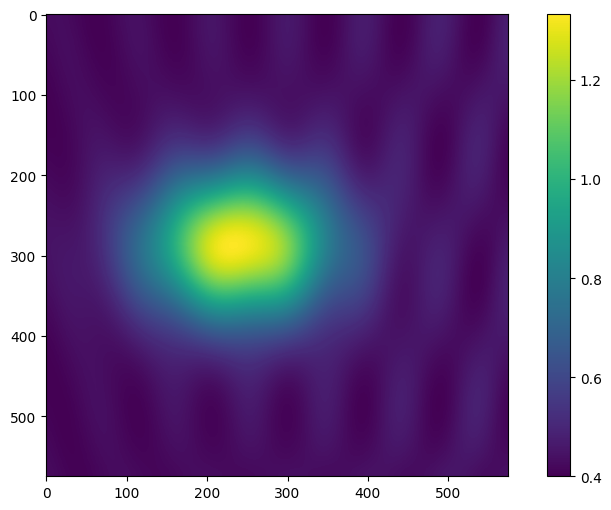

Notice that the data is there, but so is the colourbar. We can use the crop argument to deal with this, getting the coordinates we need (left, top, right, bottom) from mousing over the image in an image editor such as GIMP.

Also, the new data’s colourbar (on the far right) shows that our dataset ranges from 0 to 1, but we can see from the colourbar in the original plot above that it should range from about 0.4 to 1.333. So let’s add the vrange argument to deal with this.

data = unmap(img, cmap='jet', vrange=(0.400, 1.333), crop=(115, 72, 690, 647))

plt.figure(figsize=(10, 6))

plt.imshow(data)

plt.colorbar()

plt.savefig('../_static/Hugh_Pumphrey_CC-BY_unmapped_2.png', dpi=200)

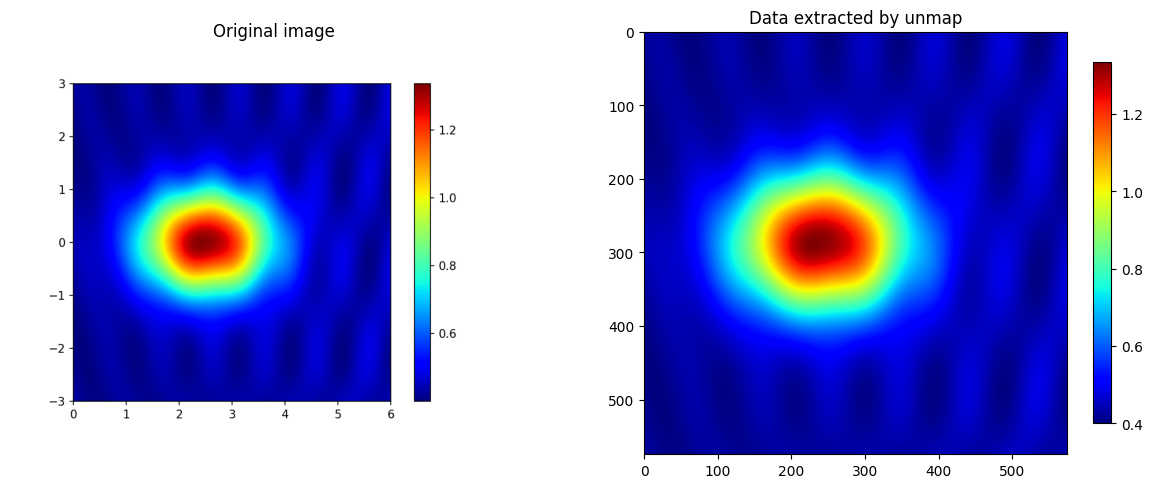
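In NumPy terms, the two arguments amount to something like the sketch below. I am assuming here that crop follows the usual image-editor convention (x for left and right, y for top and bottom, measured from the top-left corner) and that vrange is a linear stretch of the 0 to 1 result; `crop_ltrb` and `rescale` are hypothetical helpers, not part of unmap.

```python
import numpy as np


def crop_ltrb(arr, crop):
    """Hypothetical helper: crop an (H, W, ...) array with (left, top, right, bottom)."""
    left, top, right, bottom = crop
    # Rows are the vertical axis, so top and bottom index axis 0.
    return arr[top:bottom, left:right]


def rescale(unit_data, vrange):
    """Hypothetical helper: stretch values in [0, 1] to (vmin, vmax)."""
    vmin, vmax = vrange
    return vmin + unit_data * (vmax - vmin)


image = np.zeros((720, 800, 3))  # stand-in array, not the real image
print(crop_ltrb(image, (115, 72, 690, 647)).shape)
print(rescale(np.array([0.0, 0.5, 1.0]), (0.400, 1.333)))
```

With the crop used above, this convention gives a 575 by 575 pixel array, because 690 - 115 and 647 - 72 both equal 575.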

We can plot this side by side with the original image, using the same colourmap, as a rough quality check:

fig, (ax0, ax1) = plt.subplots(ncols=2, figsize=(15, 7))

ax0.imshow(img)

ax0.axis('off')

ax0.set_title('Original image')

im = ax1.imshow(data, cmap='jet')

ax1.set_title('Data extracted by unmap')

plt.colorbar(im, shrink=0.67)

plt.savefig('../_static/Hugh_Pumphrey_CC-BY_compare.png')
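If you want a number to go with the visual comparison, one option is a round trip: render the recovered data with the same colourmap and measure how far the colours are from the original pixels. This is only a sketch of that idea; `roundtrip_rms` is a hypothetical helper, and a low value says the colours are consistent, not that the data is right.

```python
import numpy as np
import matplotlib.pyplot as plt


def roundtrip_rms(rgb, data, cmap_name, vrange):
    """Hypothetical helper: RMS colour difference between an RGB image and
    the recovered data re-rendered with the same colourmap."""
    vmin, vmax = vrange
    unit = (data - vmin) / (vmax - vmin)  # back to [0, 1]
    rendered = plt.get_cmap(cmap_name)(unit)[..., :3]
    valid = ~np.isnan(data)  # ignore pixels with no data
    return np.sqrt(np.mean((rendered[valid] - rgb[valid]) ** 2))


# Synthetic check: the true data should reproduce the image, wrong data should not.
truth = np.linspace(0.4, 1.333, 600).reshape(20, 30)
rgb = plt.get_cmap('jet')((truth - 0.4) / (1.333 - 0.4))[..., :3]
good = roundtrip_rms(rgb, truth, 'jet', (0.4, 1.333))
bad = roundtrip_rms(rgb, truth[::-1], 'jet', (0.4, 1.333))
print(good, bad)
```

With the arrays from this page, something like `roundtrip_rms(img[72:647, 115:690], data, 'jet', (0.400, 1.333))` should apply, assuming the cropped result has the same shape as that slice of the image.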

A more challenging image#

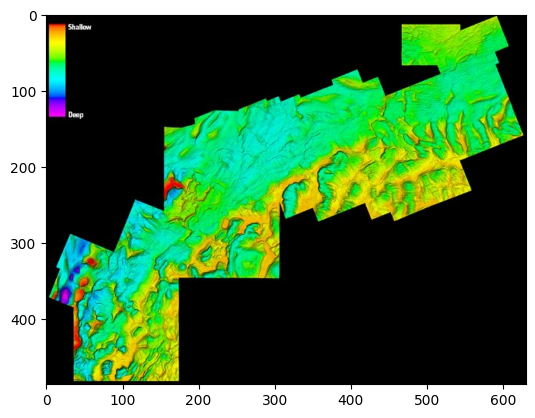

Let’s try an image from a tweet by @seis_matters (Chris Jackson):

img = get_image_from_web("https://pbs.twimg.com/media/ELX9zxIWoAId3FT.jpg")

plt.imshow(img)

<matplotlib.image.AxesImage at 0x7fcae66496f0>

Awesome. This image is perfect for testing our function, because it has various challenges:

It’s relatively small for the amount of detail it contains.

It’s a JPEG, which almost certainly means data loss.

It has hillshading, which overlays the colourmap information.

It doesn’t have a proper scale or coordinates, but we can’t do much about that.
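A rough way to see the effect of lossy compression is to count the distinct colours in the image. A map drawn from a 256-entry colourmap and saved losslessly can only contain a limited number of colours in its data area, whereas JPEG artefacts, dithering and shading all tend to smear those into many more. The sketch below uses a hypothetical helper, `count_unique_colours`, and synthetic noise as a stand-in for compression damage.

```python
import numpy as np


def count_unique_colours(rgb):
    """Hypothetical helper: number of distinct RGB triples in an (H, W, 3) array."""
    # Quantise to 8 bits so float round-off does not create spurious colours.
    pixels = np.round(rgb.reshape(-1, 3) * 255).astype(np.uint8)
    return len(np.unique(pixels, axis=0))


# A clean 16-level grey ramp, and the same ramp with a little noise added.
levels = np.linspace(0, 1, 16)
clean = np.stack([levels] * 3, axis=-1)[None].repeat(10, axis=0)
rng = np.random.default_rng(0)
noisy = np.clip(clean + rng.normal(0, 0.02, clean.shape), 0, 1)
n_clean = count_unique_colours(clean)
n_noisy = count_unique_colours(noisy)
print(n_clean, n_noisy)
```

Annotations and anti-aliased edges add colours too, so treat the count as a hint, not a test.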

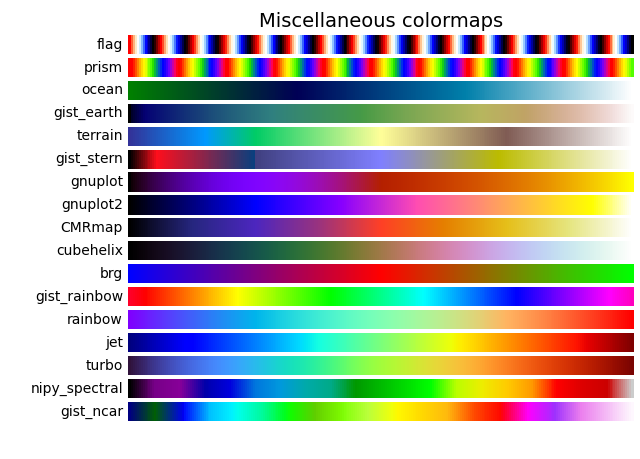

Let’s try to rip data from it… Looking at matplotlib’s colormaps…

I think the closest is 'gist_rainbow', so let’s try that:

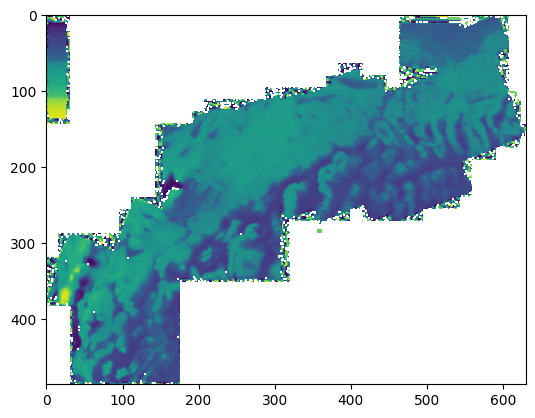

data = unmap(img, cmap='gist_rainbow', background='common', hillshade=True, threshold=0.4)

plt.imshow(data)

<matplotlib.image.AxesImage at 0x7fcafb9fead0>

Not all that great, but it’s a start. It looks like we’ll need to tidy up the edges a bit; they are all ragged because of JPEG compression artefacts.

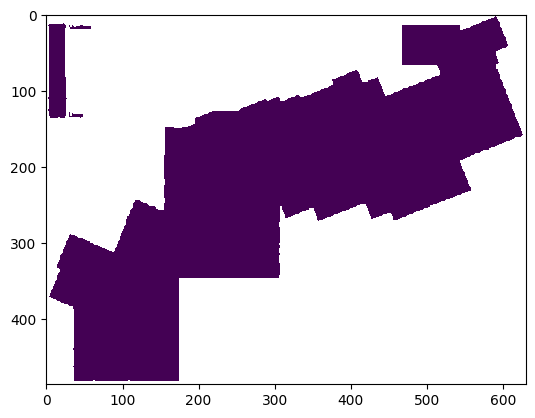

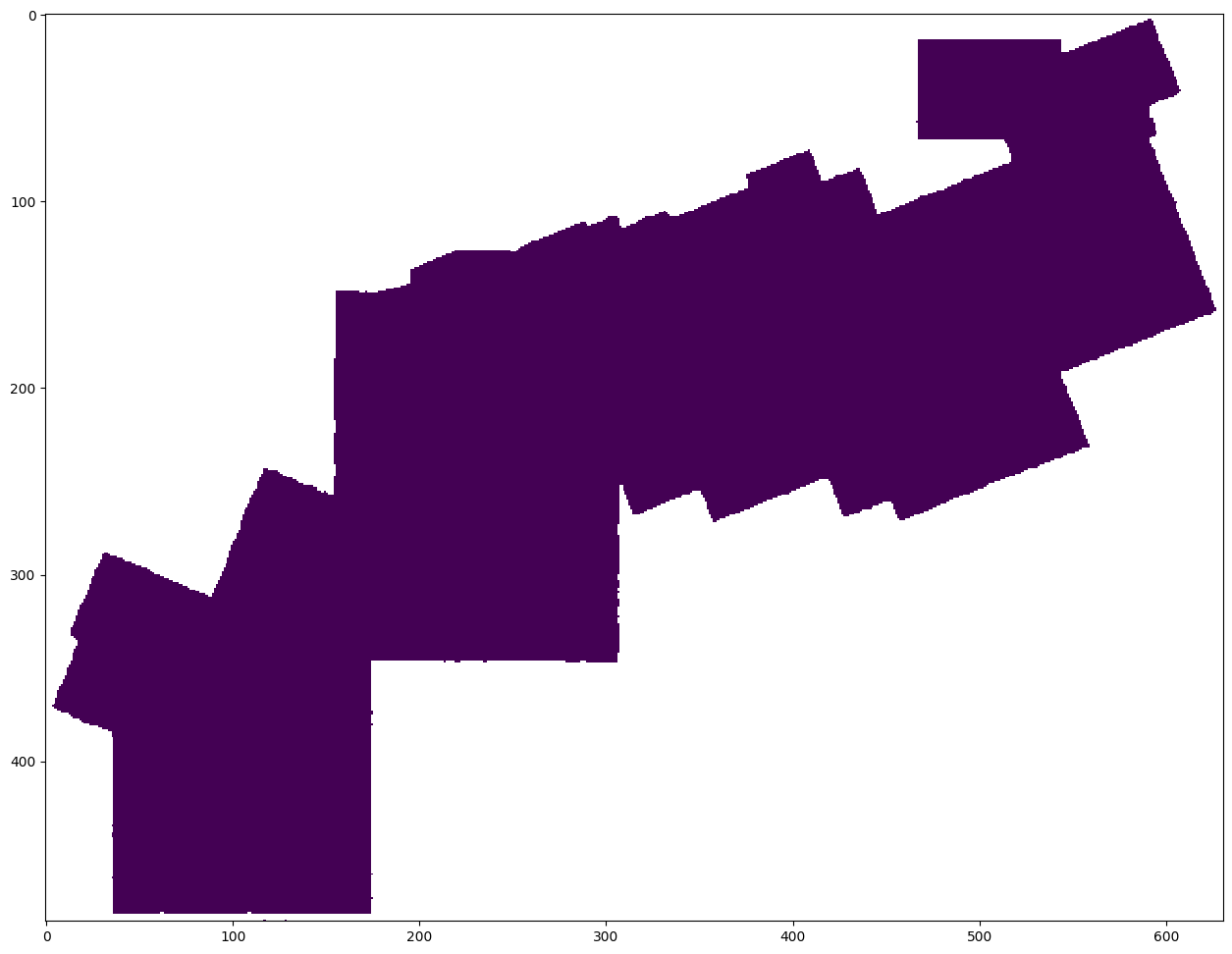
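The choice of 'gist_rainbow' above was made by eye. If you want to compare a few candidates more systematically, one rough approach is to measure how far the image's pixels sit from each colourmap's colours and prefer the smallest misfit. This is a sketch under my own assumptions: `colourmap_misfit` is a hypothetical helper, it needs SciPy, and the candidate list is arbitrary.

```python
import numpy as np
import matplotlib.pyplot as plt
from scipy.spatial import cKDTree


def colourmap_misfit(rgb, cmap_name, levels=256):
    """Hypothetical helper: mean distance from each pixel to its nearest
    colour in the named colourmap."""
    lut = plt.get_cmap(cmap_name)(np.linspace(0, 1, levels))[:, :3]
    distances, _ = cKDTree(lut).query(rgb.reshape(-1, 3))
    return distances.mean()


# Synthetic check: an image drawn with 'gist_rainbow' should fit it best.
values = np.random.default_rng(0).random((30, 30))
image = plt.get_cmap('gist_rainbow')(values)[..., :3]
candidates = ['jet', 'gist_rainbow', 'hsv', 'rainbow']
scores = {name: colourmap_misfit(image, name) for name in candidates}
best = min(scores, key=scores.get)
print(best, scores)
```

Colourmaps that trace similar paths through colour space (such as 'hsv' and 'gist_rainbow') will score close to each other, and hillshading or background pixels will inflate every score, so it helps to run this on a crop of the data area only.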

Let’s make a mask from the black pixels in the original image:

mask = np.ones_like(data)

# Mask out pixels with small R+G+B values (the dark background).

threshold = 0.1

mask[img.sum(axis=-1) < threshold] = np.nan

plt.imshow(mask)

<matplotlib.image.AxesImage at 0x7fcae66a3d00>

And blank out the colourmap area:

mask[:150, :75] = np.nan

plt.figure(figsize=(18, 12))

plt.imshow(mask)

<matplotlib.image.AxesImage at 0x7fcae68680d0>

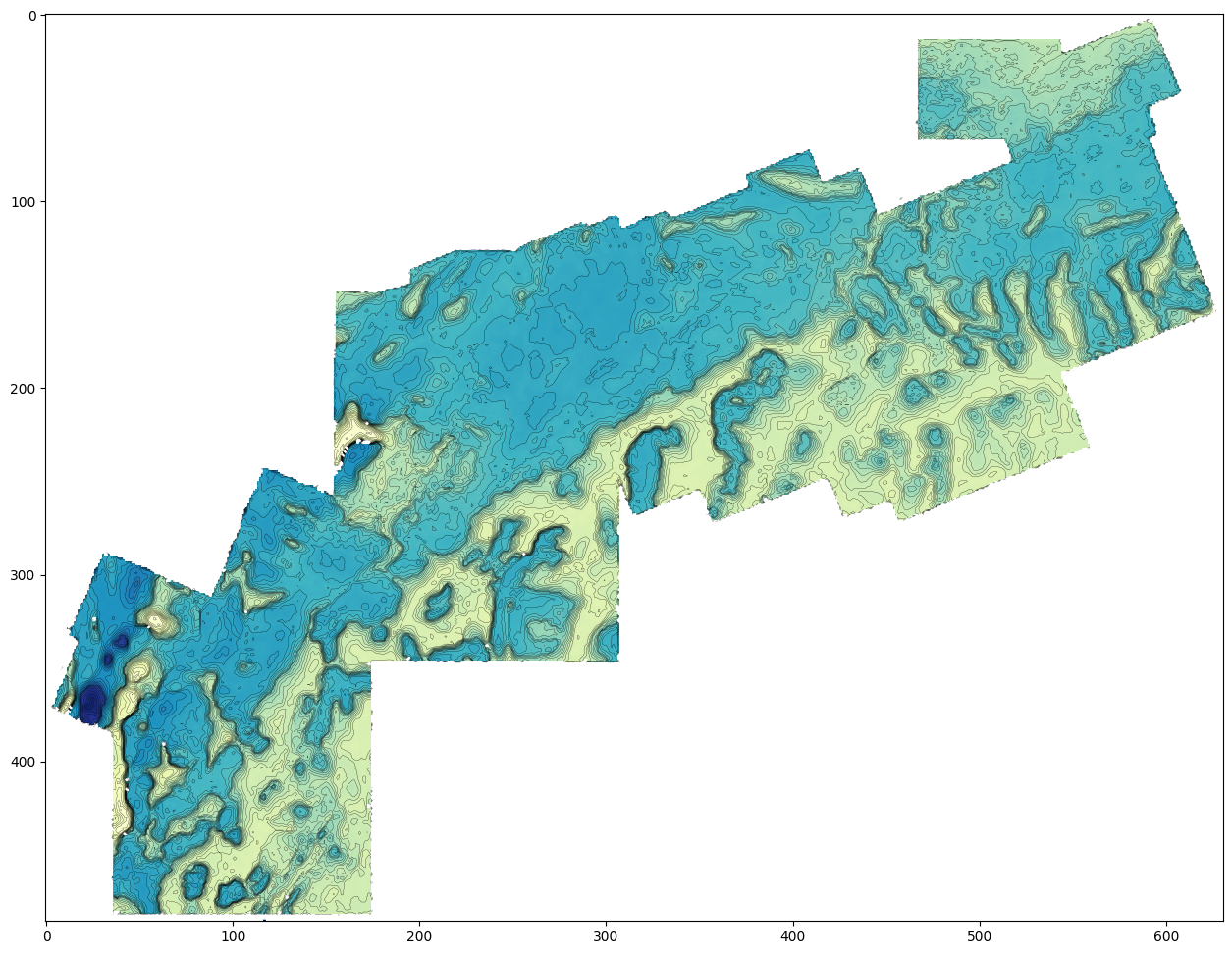

Now we can use this mask to pretty up our result:

data *= mask

plt.figure(figsize=(18, 12))

plt.imshow(data, cmap='YlGnBu')

plt.contour(data, levels=np.arange(0, 1, 0.025), colors=['k'], linewidths=[0.2])

<matplotlib.contour.QuadContourSet at 0x7fcae679ee00>

You can still see the JPEG compression artefacts in the data. We could apply some smoothing, but now we’re getting into interpretation territory…
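If you do decide to smooth, the NaNs in the masked result need some care, because an ordinary filter spreads them into neighbouring pixels. One common trick is normalised convolution: smooth the zero-filled data and the mask separately, then divide. The sketch below assumes SciPy is available; `nan_gaussian` is a hypothetical helper and the choice of sigma is arbitrary.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def nan_gaussian(data, sigma=1.0):
    """Hypothetical helper: Gaussian smoothing that ignores NaNs."""
    valid = ~np.isnan(data)
    filled = np.where(valid, data, 0.0)
    # Smooth the zero-filled data and the validity weights separately...
    smoothed = gaussian_filter(filled, sigma=sigma)
    weights = gaussian_filter(valid.astype(float), sigma=sigma)
    # ...then divide, so masked pixels do not drag their neighbours towards zero.
    with np.errstate(invalid='ignore', divide='ignore'):
        result = smoothed / weights
    result[~valid] = np.nan  # keep the original mask
    return result


# A constant field with a masked hole should stay constant after smoothing.
grid = np.full((20, 20), 2.0)
grid[5:8, 5:8] = np.nan
result = nan_gaussian(grid, sigma=1.5)
print(np.nanmin(result), np.nanmax(result))
```

Something like `nan_gaussian(data, sigma=1)` would be the equivalent call on the masked array above, but how much smoothing is defensible is exactly the interpretation question.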

Good luck with your data liberation quest!